Can’t wrap my head around it, honestly. It feels like every year is faster than the last (my mom says it’s just aging, lol), so it’s about time we discuss Q1 trends to watch in EdTech, since there are still 2 months ahead of us ;)

Unsurprisingly, some of the trends we covered in our 2025 Q4 edition, like AI in education regulations and the standardization of verified digital credentials, have continued to shapeshift well into 2026’s Q1. In fact, the past edition inspired the OctoProctor team so much that we decided to make TTWE a staple. Yup, even gave it an acronym already.

This take covers both the persisting transformations and the new B2B tides that are likely to influence how everyone in the education industry navigates 2026. Regardless of whether you like the AI-fication of education, buckle up tight – it’s here to stay!

Emotion-based adaptive learning (EBAL) is a micro-trend within AI-powered adaptive learning that aims to “read the room” rather than just reading clickstreams. I scoffed at the start of my research, too, because humans who fail to read the room trying to teach a machine to do so is iconic. Nonetheless, EBAL systems adjust content and pacing based on emotional state and psychological traits, aiming to serve the right-sized activity for that exact moment. For test takers, that means prep that respects bad days, brain fog, and stress spikes instead of punishing them. Doesn’t sound as bad anymore, right?

Researchers such as Gutierrez et al. and Salloum et al. are experimenting with models that blend classic behavioral data with softer signals such as self-reported mood, frustration indicators, or interaction patterns. Instead of pushing harder problems every time a learner gets an answer right, EBAL systems can serve consolidation tasks when focus drops, or switch modality when someone shows signs of overload. Early pilots sit mostly in tutoring and exam-prep products, where high anxiety meets tight deadlines.

For learners, emotion-driven learning systems promise study plans that flex with real life: illness, caregiving, shift work, or simple burnout. When prep becomes less punishing and more emotionally literate, fewer students hit exam day already exhausted. For institutions and employers, that matters for fairness: two people can learn the same objectives with very different emotional baselines, and EBAL gives you a way to level the prep field without watering down standards.

If you buy or build adaptive tools, start by mapping where emotional signals would actually change a decision (e.g., offer a lighter block, insert a break, switch to guided explanation). Favor transparent designs that rely on opt-in signals over opaque webcam emotion scoring, and insist on clear data retention and opt-out options.
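
To make that mapping exercise concrete, here is a minimal Python sketch of what "a signal changes a decision" could look like. Everything in it is an assumption for illustration: the `CheckIn` fields, the thresholds, and the activity names are hypothetical and not taken from any real EBAL product. The only design choice it borrows from the advice above is that it relies on opt-in signals and falls back to the default plan when a learner shares nothing.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CheckIn:
    """Opt-in signals for one study session (all field names are hypothetical)."""
    mood: Optional[int] = None       # self-reported, 1 (rough) to 5 (great); None = opted out
    minutes_studied: int = 0         # time spent in the current session
    recent_error_rate: float = 0.0   # share of wrong answers in the last block


def next_activity(check_in: CheckIn) -> str:
    """Pick the next activity. Thresholds are placeholders, not research-backed values."""
    if check_in.mood is None:
        # Learner opted out of sharing signals: keep the default plan.
        return "standard block"
    if check_in.minutes_studied >= 45:
        return "insert a break"
    if check_in.mood <= 2:
        return "lighter block"
    if check_in.recent_error_rate >= 0.5:
        return "guided explanation"
    return "standard block"


print(next_activity(CheckIn(mood=2, minutes_studied=10)))
```

If none of the branches would ever fire for your learners, that is a hint the emotional signal does not actually change a decision and may not be worth collecting.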

We see EBAL as firmly belonging in learning and prep, not in the exam room itself. If learners arrive calmer and better regulated because their prep respected their emotional bandwidth, live and automated proctoring becomes less confrontational for everyone. Our job of keeping the assessment environment predictable and humane does not include guesswork about how someone feels while they are being graded.

I also expect less memory friction and would love to see more EBAL in corporate learning. If it succeeds, digital transformation in the education community will crown emotion-based adaptive learning as the next hottest thing after gamification.

Micro- and nano-learning – 2- to 15-minute learning blocks – have become the default for corporate and adult L&D, and they’re now seeping into exams across education and compliance programmes. Short videos, single-concept cards, and tiny practice sets fit into public transport commutes and all kinds of breaks. Think about a certain green bird app that has also been gaslighting a big chunk of the world (early EBAL, was that you?). The learning side is maturing fast beyond speedrunning Spanish for funzies with friends, but the assessment side is still catching up.

K-12, HE, and professional training borrowed the format from corporate L&D, building micro-courses and micro-credentials stitched together from dozens of tiny units. Assessment typically follows suit with equally small checks: five-question quizzes, vocabulary puzzles, drag-and-drop activities, or fast visual-recognition tasks scattered throughout a course, rather than the end-of-module Armageddon.

Micro- and nano-learning suit busy lives, but they raise questions, especially regarding credibility. Tiny assessments are great for building confidence and providing quick feedback, yet they rarely include guardrails against leakage or cheating. Question pools get recycled, screenshots travel in group chats, and it’s hard to know whether repeated micro-passes add up to durable mastery or just the short-term recognition that has ruled gamified language learning. You can’t award a licence or a university credit on the strength of a quick check with dubious evidence.

When designing microlearning, decide which checks are purely formative and which should carry real weight. For the latter, invest in larger item banks and occasional, more controlled checkpoints hosted on exam delivery platforms. Align micro-assessments with a few sturdier summative tasks, so you can actually see whether all those tiny wins translate into stable skills.

We see micro- and nano-learning as fertile ground that still lacks equivalent rigor on the assessment side. Proctoring every five-question quiz with AI-powered assessment tools shouldn’t even be on the table. Absurd. OctoProctor is keen on helping institutions and companies create select, higher-stakes checkpoints within micro-learning journeys that are identity-verified and well-documented, so that frequent, small learning moments can eventually transform into credentials others can trust.

AI teaching assistants are shifting from sneaky extra tabs to built-in LMS features. Students now meet course-aware assistants inside Canvas, Moodle, Blackboard, and custom portals. For schools and universities, AI’s 2026 housewarming in the LMS changes how help, feedback, and integrity boundaries are defined in learning methodology.

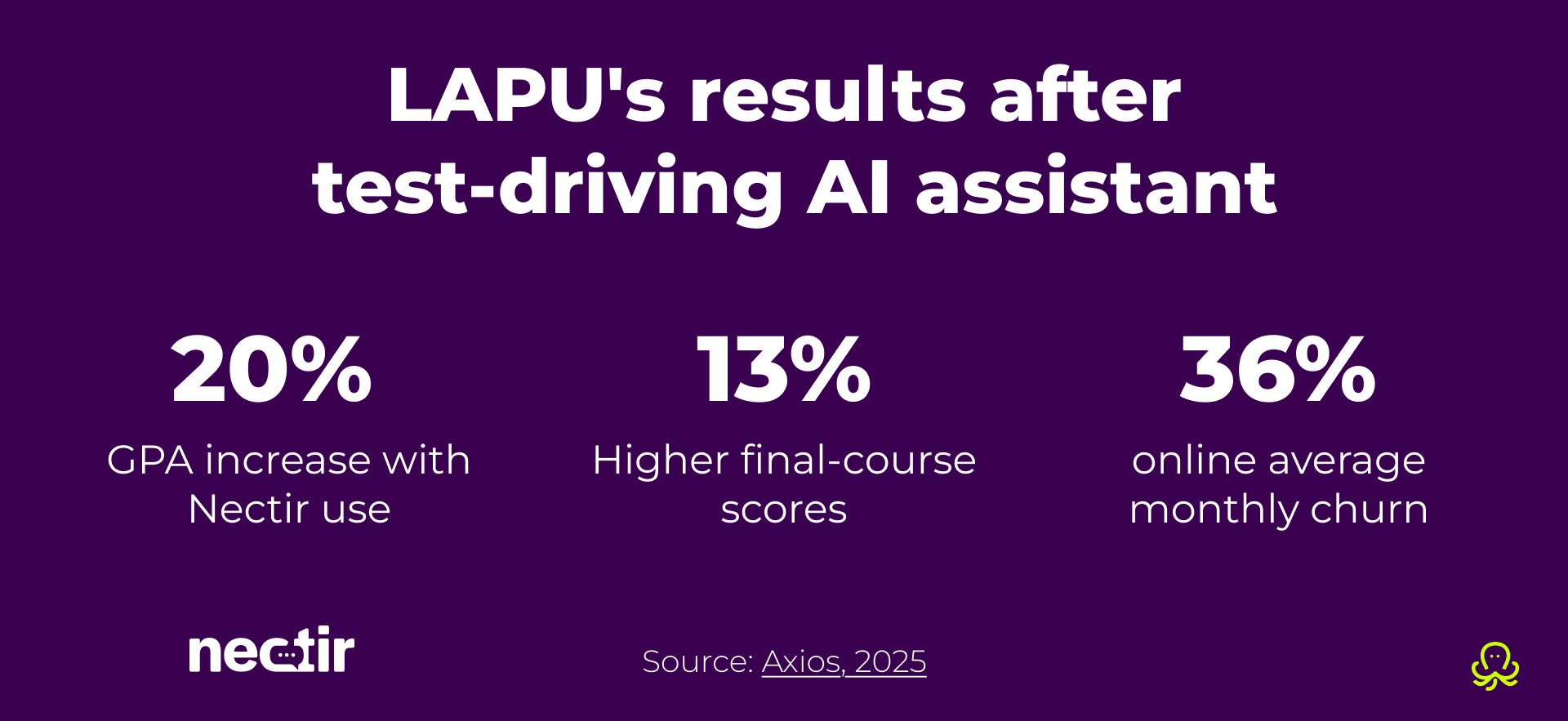

Over the past year, vendors and institutions have flirted with embedding AI assistants in the LMS that can see the syllabus and lecture notes. In secondary and tertiary education, national pilots and grant-funded projects in the US and Europe now specifically back AI support within existing platforms. For instance, Axios reports that California Community Colleges partnered with Nectir to roll out an AI assistant for 2.1 million students. Meanwhile, in corporate L&D, AI coaches still stay closer to documentation than to teaching.

Once the assistant sits inside the LMS, its answers feel more “official” than something copied from a general chatbot. Educators have to decide whether the assistant may hint or generate practice prompts, and where it must stop: before writing full assignments or leaking exam content. The more tightly integrated the assistant, the more it shapes study habits and expectations around “always on” help. Policy and pedagogy can’t look away from AI in education, especially when it comes to minors.

First of all, choose vendors for whom privacy-preserving EdTech design is the value proposition. Second, define which LMS spaces the assistant can see and what it’s allowed to do within those spaces. Write down and publish simple boundaries for staff and learners, then test them in a few courses rather than flipping a global switch. Pair AI support with at least a few assistant-free checkpoints where students must reason unaided for verified learning outcomes.

Instead of waging a constant LinkedIn war on AI, some educators decided that if they couldn’t beat the uprising, they would lead it. For OctoProctor, LMS-native assistants make the line between learning and examining even more critical. AI learning analytics are too young for anyone to definitively hail them or burn them at the stake as Skynet. However, once the closed-book exam starts, invigilation tools must enforce a clear “no assistant” zone where human-authored work and transparent monitoring are paramount. And if AI assistants sharing a space with your exam cross your hard line, look into our SCORM-based solution ;)

You thought I forgot about the laws in the previous trend? In highly regulated industries like proctoring, we may forget about legalities only when asleep. As expected, AI in education is shifting to detailed rules, especially in K-12. By early 2026, US schools and colleges are adjusting to federal compliance requirements in education technology, a patchwork of state laws, and parent-led scrutiny of anything “AI-powered” that touches children’s data or grading.

In 2025, the US Department of Education issued a Dear Colleague letter that ties federal funding to “responsible AI” practices in education. States like Idaho, Ohio and Texas are layering on their own AI and student-privacy bills, some requiring district-level AI inventories, others tightening consent and data-sharing rules. Draft federal bills such as the LIFE with AI Act focus explicitly on K-12, framing guardrails for AI-mediated instruction and student data use while giving parents stronger rights to say no.

For institutions, this means AI decisions now sit within policy and audits rather than in opaque IT experiments. K-12 leaders in particular must assume that any AI touching instruction or assessment of minors will be questioned on safety and bias. Data governance in EdTech was already tight, but for K-12, you should look no further than local solutions. Vendors that work in or on the periphery of schooling should expect longer procurement cycles, more detailed security reviews, and explicit questions about how parents can opt in or out.

Build and maintain a simple AI register: which tools you use, for what purpose, with which data, and where humans stay in the loop. Translate legal language into plain terms so teaching teams and parents can navigate it without drowning in legalese all day.
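
A register like that does not need special software to start with. As a rough sketch, assuming a hypothetical `RegisterEntry` structure with one field per question above (the tool names and values below are made-up examples, not real deployments), it could be as small as this in Python:

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class RegisterEntry:
    """One row of a hypothetical AI register."""
    tool: str                    # which tool you use
    purpose: str                 # for what purpose
    data_used: Tuple[str, ...]   # with which data
    human_in_loop: str           # who reviews or overrides; empty = nobody assigned yet


# Made-up example rows for illustration only.
register = [
    RegisterEntry(
        tool="LMS assistant (example)",
        purpose="practice prompts",
        data_used=("syllabus", "lecture notes"),
        human_in_loop="course instructor",
    ),
    RegisterEntry(
        tool="Essay feedback tool (example)",
        purpose="draft feedback",
        data_used=("student submissions",),
        human_in_loop="",
    ),
]


def missing_oversight(entries: List[RegisterEntry]) -> List[str]:
    """Return the tools that have no named human in the loop."""
    return [entry.tool for entry in entries if not entry.human_in_loop.strip()]


print(missing_oversight(register))
```

A spreadsheet with the same four columns works just as well; the point of the helper is only that gaps in human oversight become something you can list rather than something you discover during an audit.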

We already treat AI as optional support. Institutions can pick from AI-assisted, automated, or fully live proctoring, and keep humans in charge of final decisions. As AI legislation tightens, that flexibility becomes less of a bonus and more of a baseline expectation for any assessment partner.

Skills passports consolidate learning and employment records (LERs), digital badges, and, in Europe, EU identity wallets into a single portable layer of proof. Instead of scattered PDFs and screenshots, people move through education and work with a verifiable trail of what they’ve learned. Sounds like a belated dream come true for hybrid learning ecosystems. Still, once that trail becomes machine-readable and shareable, the quality of the underlying accreditation and digital exams suddenly matters a lot more.

LER pilots in the US and large-scale European projects around the EU Digital Identity Wallet are moving from concept to infrastructure. Universities and corporate academies issue digital badges tied to short courses and micro-credentials. Employers start to accept these records as part of hiring and promotion decisions, rather than asking only for degrees and CVs. The result is an emerging layer of “skills passports” that sit above individual platforms and institutions.

When a credential can be verified with one click, the obvious next question is: verified against what? If a badge or LER entry is going into a wallet that travels across borders and sectors, its metadata – skills, level, standards, issuing body – and the integrity of the associated assessment take centre stage. Products that can issue verifiable credentials by design will find it easier to plug into this ecosystem. And if there is room for abuse, someone will definitely try to take advantage of it. Ironically, weak exams will produce weak skills passports.

Map which of your assessments produce outcomes that should live beyond your own platform. For those, tighten item design, blueprinting, and result metadata so they can be turned into portable records. Explore how your stackable credentials could be exposed to LER systems or EU wallets, and check whether your assessment logs are good enough to stand behind them.
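
One way to run that check is to treat each assessment outcome as a record and list what is missing before it can travel. The Python sketch below is an assumption-heavy illustration: `CredentialRecord` and its field names are hypothetical, loosely following the metadata named earlier (skills, level, standards, issuing body) plus a pointer to the assessment log. It is not the Open Badges, LER, or EU wallet schema.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class CredentialRecord:
    """Hypothetical result metadata for one assessment outcome."""
    title: str
    skills: List[str] = field(default_factory=list)
    level: str = ""
    standard: str = ""
    issuing_body: str = ""
    assessment_log_ref: str = ""   # pointer to the log that backs the result


def portability_gaps(record: CredentialRecord) -> List[str]:
    """List the metadata fields still empty before the record can be portable."""
    gaps = []
    if not record.skills:
        gaps.append("skills")
    for name in ("level", "standard", "issuing_body", "assessment_log_ref"):
        if not getattr(record, name):
            gaps.append(name)
    return gaps


# Made-up example: a short course with only partial metadata filled in.
draft = CredentialRecord(
    title="Intro to Data Privacy",
    skills=["GDPR basics"],
    issuing_body="Example Academy",
)
print(portability_gaps(draft))
```

An empty list does not prove the exam behind the record was sound; it only tells you the record says enough for someone else to ask the right questions.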

Skills passports are an opportunity for everyone, including proctoring. For us, that means pairing secure identity verification, stress-free live or automated monitoring, and clean logs so institutions can confidently label credentials as “backed by proctored assessment.”

Will fake digital badges in education persist after LER systems are standardized? What haven’t humans faked yet?

And honestly, EU identity wallets should be a global standard, given that 1 in 67 people worldwide is forcibly displaced due to conflict, persecution, violence, and human rights violations. Paper is too wasteful and unreliable to keep ruling EdTech in 2026.

Many of the B2B EdTech trends that I covered sound predictable, and that is great. Not every quarter should be a rocket launch – that would be too costly and unsafe, if the history of space exploration is anything to go by. A change that is here to stay responds to today's needs. Diffused attention? EdTech offers us micro- and nano-learning. Neurodiversity has become a reality that education can’t ignore anymore? We’ve got EBAL to make learning less painful and more persona-aware. The world is undergoing an unprecedented and accelerating period of global migration? Enter skills passports and identity wallets to keep talent and dignity at people’s fingertips.

Proctored assessments in EdTech are yet again coded as part of the future of digital learning, and I am sorry to disappoint the opposition with that.

In the context of rapid changes and AI-fication of education, exams are not the imagined boundaries that keep people from learning or succeeding. On the contrary, exams help identify knowledge gaps where AI may blur the line between personal and collective knowledge. OctoProctor helps verify growth and supports mobility with a device-agnostic tool that needs a minimum bandwidth of just 256 kbps and produces clear, auditable reports.

Until the next TTWE, my fellow EdTech changemakers, explorers, and enthusiasts!

Your new learning methodologies will surely look amazing in your employees’ skills passports. You are just a step away from finding out how proctoring can help your organization deliver reputable, shareable assessments. Just sayin’ 🐙

Contact Octo!

AI ethics in EdTech is a question that has a subjective answer. Does your AI learning build on fairness, transparency, accountability, privacy, and human agency? If we consider having a human review an AI-powered OctoProctor exam session, then yes, this AI use is ethical. By contrast, uploading someone’s work to LLMs without their explicit permission to train an AI learning assistant is unethical.

Radically – no, but I would definitely keep LERs on the radar given the convergence trend in EdTech. Maybe the EU Digital Identity Wallet will ease identity verification in online exams.

If skills or compliance are related to the nuclear, military, or space sector, you might consider enterprise-grade exam security. But for moderate/low-risk sectors, I recommend simply proctoring assessments.

Further exploration of AI in education and learning that centers on personal stamina and schedule gaps, aka EBAL, and microlearning. Expect more nano-learning pop-ups in enterprise learning platforms, too. And, of course, expect the rise of demand for secure online testing for enterprises, given that AI learning assistants and compliance exams do not go well together.

The best way AI can change education is through emotion-based adaptive learning. That is, if it finds a key to understanding the complex human psyche, which is often quite irrational. Expect apps that speak to you, analyze your personal data, and keep it, like a psychologist. The most widely used and normalized analogues I can reference are AI-based women’s health or sleep trackers.

No. Adaptive learning is EBAL’s tried-and-tested predecessor, featuring continuous learning diagnosis, personalization, and engagement, but no emotion-based check-ins or adjustments.

As long as the topics covered by microlearning are actually micro – meaning concise – yes, it significantly boosts retention, engagement, and motivation by reducing cognitive load and making learning an integrated part of daily routine. Also, I would not trust microlearning with complex topics that need significant synthesis.

So far, in 2026, microlearning assessments are simple quizzes that carry no exam-integrity safeguards.

Speaking of AI teaching assistants replacing tutors in the US – highly unlikely, at least within K-12 education. But the ethics of private establishments are solely up to them, and so far, there are no strict regulations explicitly banning adults from choosing AI teaching assistants over conventional tutors.