USA – the three letters that embody the dream for many international undergraduate applicants. Top-rated institutions with state-of-the-art facilities that scream privilege, student clubs that are featured in Hollywood movies, and students – diverse yet curated. Sometimes, I wonder whether showing the less glamorized truth of the US college application process would discourage prospective international applicants. Undeniably, being a student in the USA has changed drastically since the noughties, and at times, the pressure on admissions is almost violent, proportional to how close a school’s ranking is to the top.

Local US students have been struggling to demonstrate their character through a wide range of extracurriculars: charity, community work, sports, hobbies, theatre, volunteering, in addition to excellent grades and a dozen entrance essays. Looking at American applicants and tallying their own admission points, which frequently lack AP courses, international applicants often feel desperate enough to doubt their high grades and throw themselves into an activity campaign.

Where does integrity sit in the performative US undergrad entrance process? Are they searching for superhumans? Ready products to sell? But then why do first-time undergrad dropout rates peak at 39%, and why is post-graduation unemployment so high? Why not stick to the applicant’s knowledge rather than easy-to-fabricate personality tropes, especially during undergrad admissions (not every 18-year-old knows what they're going to do in 4 years to begin with)? I have been asked these questions by prospects who have already lost their rose-colored glasses, sometimes a year into the “holistic admission” campaign.

This article will review and challenge the current state of affairs, because US undergrad admissions have long needed the wind of change.

Fall 2025 has seen a 17% drop in new international admissions at US universities, according to the BBC.

Let’s be realists. With funding cuts and tightened visa policies, US tertiary education is entering its local era. If it is hard for local students to afford tuition and not drown in student debt for the next 15 years, think about the bright minds from abroad. Yes, US undergrad diplomas have always been about the wealth of international applicants, but some exceptional individuals relied on private and government funding to experience the American dream for the betterment of society. 2026 will be a much harsher year for international talent recruitment, and admissions will feel the pressure of choice.

“One-size-fits-many” blueprints can unintentionally under-measure what matters in your program, even when the approach is “valid” in a general sense. So, I propose that your admissions adapt and localize. But first, let me walk you through what I think does not fit reality in the current state of admissions.

“The reasons that I have for wishing to go to Harvard are several. I feel that Harvard can give me a better background and a better liberal education than any other university. I have always wanted to go there, as I have felt that it is not just another college, but is a university with something definite to offer. Then too, I would like to go to the same college as my father. To be a ‘Harvard man’ is an enviable distinction, and one that I sincerely hope I shall attain.”

Written by John F. Kennedy in 1935, that essay most probably would not have earned the 35th US president admission to today’s Harvard. Ironic, right? The modern university essay has built a multi-billion-dollar industry around itself.

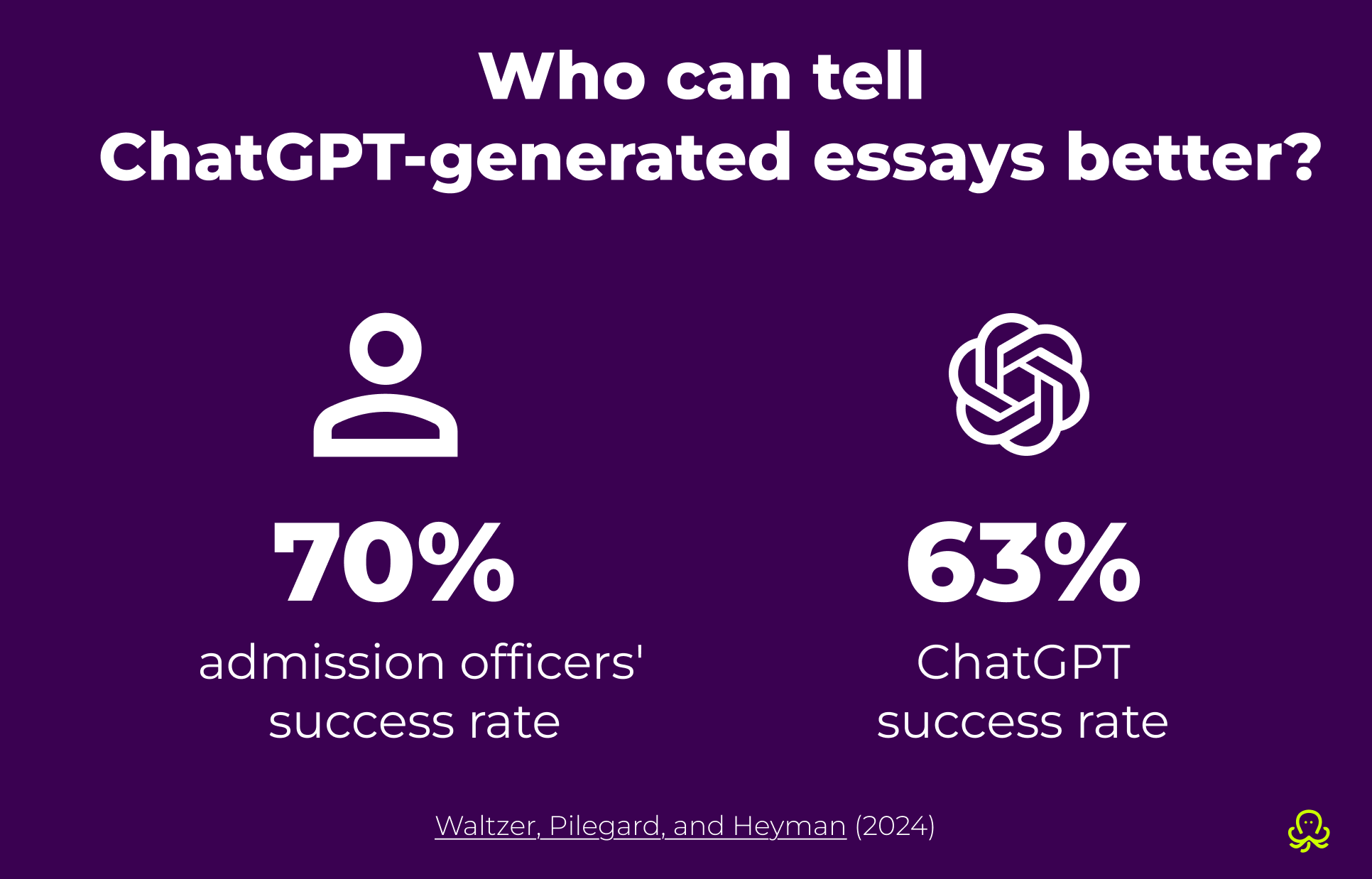

Generative AI and ghostwriting have made authenticity hard to verify. When you deal with entrance essays at scale, you lose your feel for what is human and what is not. Yeah, I know, I know, you, an admissions officer, are not that easy to fool, but let’s look at the problem from my angle.

First, humans mimic one another, especially in communication. You could attribute buzzwords to different admission seasons each year. Since 2024, society has had a broader problem than social media echo chambers. You guessed it right, AI. Not only do users see AI-generated text in bulk online, but they also normalize it and, subconsciously, begin incorporating its style into their own writing. Applicants have been steadily losing their unique voices, and admission essays have been losing their purpose along with them. AI detectors are not a solution to this problem because they can flag actual humans (especially international applicants) who may have learned to write from… AI. Just like in the 80s and 90s, some learned English by watching TV.

US colleges themselves are now experimenting with AI screening of essays, extracurricular activities, and transcripts. Useful for triage and accelerating response times, particularly for popular institutions, but questionable in terms of rigor and prestige. Also, a bit hypocritical – you ask students to sign no-AI pledges and then have AI read their essays (have you asked for their consent, btw?). Choosing between a moody and tired human being and AI, whom do you think applicants would trust more? Especially amid widely publicized reports of AI bias in grading, which are likely to keep growing in number throughout the 2026 education AI-fication. And yeah, AI grading AI underscores how fragile integrity has become on both sides.

Cornell research also shows AI-generated essays can mimic privileged stylistic markers, particularly those of white males from higher socio-economic backgrounds, extending existing equity and bias questions. The US essay admission tradition increasingly reflects access to coaching or tools. A 500-word essay written over months is the last thing I would rely on to assess modern students’ independent writing or readiness to undertake a program.

More than 2,000 US colleges and universities will remain test-optional or test-blind for fall 2026, while selective ones are reverting to ACT/SAT dominion. I support ditching privately run, for-profit standardized tests in the US unless they become truly standardized, owned by the government, and part of the curriculum.

Firstly, ACT and SAT test a narrow range of skills – math, reading, grammar – while missing creativity, critical thinking, and other talents essential for college and future professional life. Secondly, ACT/SAT, in their current form, are a multi-million-dollar oligopoly that thrives on inequality. Thirdly, SAT retakes are unlimited, while ACT caps retakes at 12 per lifetime – both are administered multiple times a year, which makes results and the superficiality of “competition” even more questionable. The ability to retake and superscore the test gives well-off students yet another advantage. Their privilege lies in knowing that a retake is always an option, making prep time management less stressful compared to those who rely on fee waivers and are less financially fortunate. A single composite score rarely maps to discipline-specific competencies. For placement and success in first-year sequences, alignment outweighs generality, which is why some prestigious EU universities administer their own admission tests. Finally, US standardized testing still hinges on physical test centers, even after the 2024 digital SAT rollout. Both US and international candidates in “test-center deserts” suffer from long, costly travel and missed sittings.
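To make the superscoring advantage concrete, here is a minimal sketch. The section names and all scores are invented for illustration, and the functions are hypothetical helpers, not anything published by the test makers. It assumes superscoring means taking the best result per section across all sittings, which is how the practice is commonly described.

```python
from typing import Dict, List

# One sitting: section name -> section score (all numbers below are invented).
Sitting = Dict[str, int]


def best_single_sitting(sittings: List[Sitting]) -> int:
    """Highest total achieved on any single test date."""
    return max(sum(s.values()) for s in sittings)


def superscore(sittings: List[Sitting]) -> int:
    """Sum of the best score per section, taken across all test dates."""
    sections = sittings[0].keys()
    return sum(max(s[sec] for s in sittings) for sec in sections)


# Hypothetical applicant who can afford three sittings.
three_sittings = [
    {"reading_writing": 640, "math": 700},
    {"reading_writing": 690, "math": 670},
    {"reading_writing": 660, "math": 730},
]
# Hypothetical applicant with the same first result but only one fee waiver.
one_sitting = three_sittings[:1]

print("single sitting only:", superscore(one_sitting))
print("best of three dates:", best_single_sitting(three_sittings))
print("superscore of three:", superscore(three_sittings))
```

In this made-up case, the same first-day performance turns into a noticeably higher reported score purely because more sittings were affordable.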

Another big issue I see with the SAT and ACT is cheating prevention and investigations. It is no secret that domestic and international test takers often receive scripts in advance. Some test results get cancelled by the administering organization (for example, country-wide score wipe-outs in Egypt and Hong Kong by the College Board), while others may go undetected. In other cases, administering organizations have been known to accuse face-to-face test takers of cheating based solely on undisclosed statistical analyses comparing one’s answers to those of the person sitting next to them, sometimes announced months into the application process.

A recent vendor-sponsored large-sample (100k+ students) analysis report indicates that TEAS correlates with early nursing success. Multi-institutional results, like those from Palmer and Rolf or Dunham and Alameida, on the other hand, still show variability by program and outcome. The HESI A2 nursing entrance exam likewise shows predictive links in some settings, while other reports (and reviews) note uneven effect sizes or context-dependency. Validity often shifts with local policies and cohorts, which is a mess if we aim for fair admissions. Programs still need curriculum alignment and transparent appeals to avoid over-relying on a one-size score. TEAS and HESI test takers routinely discuss sold-out sites, booking weeks in advance, or paying more for online sittings, further supporting my point about the questionable credibility of current US standardized college admission tests.

“Kaplan-style” diagnostics and general math placement tools are convenient, but also dangerously easy to over-trust. If your biggest early attrition occurs at the gateways (Intro stats, CS0/CS1), then alignment should beat generality for your program admissions. Even within a single institution, a placement mechanism can shape who enters Calculus 1 and who persists in an engineering pathway (and it can do so unevenly across groups), which is exactly why placement strategy is a lever for retention and equity. When assessment items drift away from the curriculum objectives, students are tested on irrelevant content, complex but mismatched scenarios, or inappropriate cognitive levels. That kind of disconnect has been shown to harm learning and motivation, because students stop trusting your institution.

When institutions outsource high-stakes assessment work, they also inherit vendor process risk. A sharp illustration is the California Bar exam controversy: reporting described how multiple-choice questions came from multiple external sources, including a major test-prep vendor, and that some items were developed with AI assistance by a psychometric contractor – triggering conflict-of-interest criticism and a legitimacy backlash. Test-takers reported that the questions contained odd phrasing and unacceptable mistakes for an exam that gates one’s future.

Anticipating that question, no, only a few niche/private US colleges and universities own their entrance exams, and they often use the exam more as a minimum gate than as a high-stakes competitive ranking. Business is business, and not every international student aims for admission to the Ivy League.

A more widespread example of program-specific admissions is institution-run auditions and portfolio “entrance exams.” Nobody would question that applicants to Bachelor of Music degrees must complete a School of Music entrance audition in addition to the standard application.

Owning an exam does not equal reinventing the SAT with your logo on it. Exam ownership helps you reclaim the signal you actually need from applicants and build a delivery model that’s fair enough to defend and on-brand with your institution.

If your undergrads struggle in Calc I, Chem I, Intro CS, or academic writing, then your admissions screen should map to those gateways. Cliché, but your English department may be missing out on a literature prodigy because they are only on formal terms with algebra. University is where undergrads are supposed to narrow down and find their niche. “General aptitude” and the ability to pay for prep markers are useless both for their future with you and for the test-takers themselves.

The practice of administering their own admission exams has been in place at EU institutions for some time, on top of state exams that students prep for and pass as part of their curriculum in their respective countries. Selective universities ensure that applicants from around the world can undertake the specific program and be part of a competitive cohort. It is rare for EU institutions to retest everything on top of an applicant’s diploma, which already includes passed school curriculum exams. EU universities are successful not because they are old, but because they recruit students who align with their brand, which includes pedagogy. Moreover, some countries, such as the Netherlands, significantly limit numerus fixus applications rather than allowing mass applications to numerous programs, to prevent misalignment and application overflow during the admissions period. Applicants need to choose carefully first; only then do the admissions enter the scene and “certify” the alignment.

As a college application counselor, I have seen admission strategies consisting of 40+ universities. The aim in such cases is not to be admitted to your institution. The aim is to get admitted to the USA, whatever it takes. Behind a holistic application, the international applicant may not know your university’s values, labs, rigor, specialization, etc. Yes, the essay can name-drop a professor or two, narrate an entire campus map, chirp about how the applicant had been interested in that particular breakthrough topic your university leads – for years. But in reality, it was at best surface-level research. Professor names can be replaced, labs juggled, and vlog-style descriptions of “my day with [put your university name]” are also a few minutes of edits. If the applicant is attentive enough. But when such applicants arrive on campus, they struggle to integrate because they were never truly prepared for your institution. For that reason, South Korean admissions offices often conduct interviews with shortlisted applicants, a practice that helps ensure alignment alongside in-house exams.

Owning the exam should not be an “everyone arrives at the gym at 7 a.m., including international applicants” scenario. That would be something similar to Squid Game, given the stakes and possibly limited program quota.

The hybridity of modern education is an asset: you can administer the entrance exam remotely without sacrificing integrity. Auto proctoring with human review gives you identity assurance, secure windows and parallel forms (with control over variants and content), and time-zone fairness that doesn’t discriminate against international applicants.

Beyond our minimal 256 kbps bandwidth specialty, OctoProctor lets you build respectful alternatives: non-biometric identity paths, accommodations that apply automatically, and a choice between an LMS integration and going indie with a SCORM player. No need to travel to another city/country to verify one’s readiness for the program; all can be done once, from the comfort of the applicant’s home.

When you own the exam, you own the rules. You can define what counts as a material incident, what evidence is reviewed, and how fast decisions are made, making it a part of your institution’s reputation. You can standardize an evidence bundle (e.g., timeline + logs + short clips + rationale) and publish an appeals SLA that enhances the positive applicant experience through clarity. And, as an extra mile for your brand, you can publish fairness metrics – incident rates by type, appeal rates, reversal percentages – because transparency is how you earn legitimacy in high-stakes admissions.
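As a rough illustration of the fairness metrics mentioned above, the sketch below computes incident rates by type, an appeal rate, and a reversal percentage from a list of incident records. The `Incident` record, its field names, and the incident labels are all hypothetical; they are not an OctoProctor data format, and a real program would define its own taxonomy.

```python
from collections import Counter
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Incident:
    """Hypothetical record of one flagged exam session."""
    session_id: str
    incident_type: str                         # e.g. "second_person", "left_frame"
    appealed: bool = False
    reversed_on_appeal: Optional[bool] = None  # None until the appeal is decided


def fairness_report(total_sessions: int, incidents: List[Incident]) -> dict:
    """Aggregate the publishable numbers for one admission cycle."""
    by_type = Counter(i.incident_type for i in incidents)
    appeals = [i for i in incidents if i.appealed]
    decided = [i for i in appeals if i.reversed_on_appeal is not None]
    reversed_count = sum(1 for i in decided if i.reversed_on_appeal)
    return {
        # Share of all sessions flagged with each incident type.
        "incident_rate_by_type": {t: n / total_sessions for t, n in by_type.items()},
        # Share of flagged candidates who appealed.
        "appeal_rate": len(appeals) / len(incidents) if incidents else 0.0,
        # Share of decided appeals that overturned the original decision.
        "reversal_rate": reversed_count / len(decided) if decided else 0.0,
    }


# Invented data: 200 sessions, 4 incidents, 2 appeals, 1 reversal.
incidents = [
    Incident("s-001", "second_person", appealed=True, reversed_on_appeal=True),
    Incident("s-017", "second_person"),
    Incident("s-042", "left_frame", appealed=True, reversed_on_appeal=False),
    Incident("s-108", "left_frame"),
]
report = fairness_report(total_sessions=200, incidents=incidents)
print(report)
```

Keeping undecided appeals out of the reversal denominator is a design choice here; whichever convention you pick, publish it next to the numbers.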

At scale, a well-run in-house exam program can reduce per-candidate costs, especially when you control item reuse and refresh. You can iterate new forms each cycle and update scenarios faster when curricula change, instead of waiting on vendor roadmaps. And the biggest payoff is analytic, such as item exposure signals and DIF monitoring, tied back to tangible outcomes like gateway-course performance and retention. Thus, a tailored university admission exam translates into the feedback loop that standardized tests can’t give you: whether your admissions gate is actually setting students up to succeed once they arrive.
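For readers unfamiliar with DIF (differential item functioning) monitoring, here is a minimal sketch of one common approach, the Mantel-Haenszel statistic. The article does not prescribe a method, so treat this choice, the group labels, and the counts as assumptions. Candidates are matched on total score (the strata), and for each item you compare the odds of a correct answer between a reference group and a focal group.

```python
import math
from typing import Dict, Tuple

# Per score stratum: (ref_correct, ref_incorrect, focal_correct, focal_incorrect)
Stratum = Tuple[int, int, int, int]


def mantel_haenszel_dif(strata: Dict[str, Stratum]) -> Tuple[float, float]:
    """Return (common odds ratio, delta) for one item.

    delta = -2.35 * ln(odds ratio); a negative delta means the item is
    harder for the focal group than for matched reference-group candidates.
    """
    num = 0.0
    den = 0.0
    for a, b, c, d in strata.values():
        n = a + b + c + d
        if n == 0:
            continue  # skip empty score bands
        num += a * d / n
        den += b * c / n
    if num == 0 or den == 0:
        raise ValueError("not enough data to estimate the odds ratio")
    odds_ratio = num / den
    return odds_ratio, -2.35 * math.log(odds_ratio)


# Invented counts for one item, split into two total-score bands.
balanced_item = {"low": (20, 20, 10, 10), "high": (30, 10, 15, 5)}
suspect_item = {"low": (20, 30, 10, 40), "high": (40, 10, 30, 20)}

for name, item in [("balanced", balanced_item), ("suspect", suspect_item)]:
    ratio, delta = mantel_haenszel_dif(item)
    print(f"{name}: odds ratio={ratio:.2f}, delta={delta:.2f}")
```

A flagged item is a prompt for human content review, not an automatic verdict; a production analysis would also add significance testing and minimum sample sizes per stratum.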

Once you’ve accepted the premise – your entrance exam should reflect your curriculum – the real decision becomes: who owns what. The good news is that your institution has several scenarios to choose from, depending on your budget, admission flow, and timeline.

The fastest option, and in my opinion the most budget-friendly, is to own the content and hand delivery to a partner. You write the blueprint and items; a proctoring partner handles secure delivery, identity checks, monitoring, and support.

Why teams pick it: speed and predictable operating costs.

Tradeoff: you still need internal governance, but you are not building a proctoring operation from scratch.

Building and running everything in-house is the “we’re becoming a testing program” path, a very rare and long one, and I would honestly advise against it unless your Education and IT schools want a joint flagship project. In this case, you control everything – monitoring rules, evidence bundle, retention, support workflows, and the exam UX.

Why teams pick it: highest control over compliance posture, data minimization, and candidate experience.

Tradeoff: operations (security, privacy, accessibility, legal, incident review, and continuous test maintenance) and the sheer amount of investment needed. You are also very unlikely to compete with major industry players if you ever hope to break even. Considering this type of headache? I dare you to begin by reading the ITC/ATP Technology-Based Assessment guidelines.

If you are unsure about owning content yet, but determined to run exams on your own, this option is for you. You keep a third-party test for comparability, but you deliver it under your preferred proctoring vendor.

Why teams pick it: a transition model, useful when you need a quick structure while building your own item bank.

Tradeoff: you inherit the biggest limitation of outsourcing, which is weaker alignment to your gateway courses, plus less control over item refresh/exposure practices.

The solutions I have offered are non-standard for America, and that is the point of this article. Proctoring entrance exams will not solve the corporatization of US tertiary education. Moreover, owning your admission exams may generate additional cash flow for your institution, depending on the direction and philosophy you choose. But it will also reclaim fairness and re-establish the prestige that should, foremost, lie in explainable, competitive academic excellence.

The constructs your curriculum requires may include discipline-specific skills, time-limited writing, basic coding, or lab math. When the assessment speaks the same language as the classroom, scores become interpretable: this student is ready for our first-year pace. And, what is even more critical for US international admissions, we will keep this student.

Implementing something new or different should not be a lonely path. The OctoProctor team is an integrity partner that will have your back, so how about we modernize your current admission process by adding proctored exams you are in control of?

Let's talk!

It always depends and should be checked with a specific university/nursing school. In many cases, yes, nursing exams are “outsourced” and then administered by the “admitting” institutions. For example, the Kaplan Nursing Admission Exam is administered locally or online with a remote proctoring vendor such as OctoProctor.

Back in the day, when US admissions were smaller in scope, US universities used to own their entrance exams. The standardized format emerged along with higher demand and the commercialization of American tertiary education. Currently, US colleges and universities are revisiting standardized exams and their place in holistic admissions after significant pushback in 2020-2023. The biggest criticism is the duopoly of the ACT and SAT, and how their system creates inequality between wealthy and underprivileged test-takers by offering paying students multiple exam sittings in a year and by creating an entire extracurricular standardized-exam-only tutoring industry.

Depends on your goals. Some private universities actually leverage in-house admission exams to increase their admission rate. If you prioritize prestige and selectivity, you can tailor the exam content more closely to your competitive program and lower the passing rate.

Yes, and in addition to being a good solution for boutique universities, online proctoring is a strong support for large-volume, rolling admissions. On-demand entrance exam scheduling is best combined with record-and-review proctoring.

No, as far as I am aware at the time of writing this article, the Common App does not support exams. For exams, you will either need to arrange a testing environment using an LMS or use OctoProctor’s SCORM-based solution, which does not require creating LMS accounts for candidates.

Foremost, it is unlikely that 2 million people will ever try to take your college admission exam in a single year, unlike the SAT or ACT. Scheduling test sessions will be easier, and remote proctoring will reduce costs for both test takers and institutions. No testing deserts, no retake abuse, no exam slot nightmares, and fewer cheating or exam leak chances if you are using OctoProctor solutions.

Student scores are already with you (their university of choice) – no need to pay College Board to send exam scores to your institution, wait, or set aside extra funding for expedited processing. Test takers pass the entrance exam, and they are done.